You know what, that’s a gem, that’s a really good guess.

The collision point is randomly distributed within [elbow, elbow-1] because it depends on the timing of the collision relative to the sensor reading, which we can’t control.

bogo does so well because the average distance between any 2 random points on a unit-length line is 1/3. Guessing the middle point gets you down to an average error of only +/- 1/4.

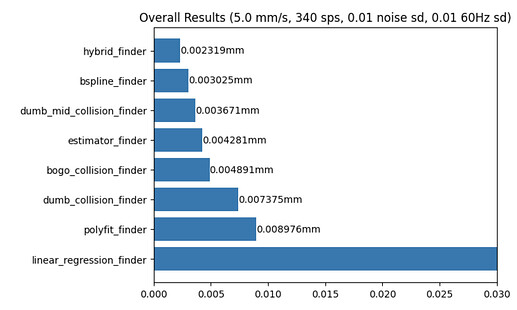
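The 1/3 and 1/4 figures are easy to sanity check with a quick simulation. This is only a sketch: it assumes the true collision point is uniformly distributed over an interval normalized to [0, 1], and the function name `simulate` is made up for illustration.

```python
import random


def simulate(n=200_000, seed=0):
    """Compare a random guess against a middle guess on the unit interval.

    Assumption: the true collision point is uniform on [0, 1], i.e. the
    [elbow, elbow-1] interval rescaled to unit length.
    """
    rng = random.Random(seed)
    random_err = 0.0
    middle_err = 0.0
    for _ in range(n):
        truth = rng.random()
        # Random guess: a second, independent uniform point.
        random_err += abs(rng.random() - truth)
        # Middle guess: always pick the midpoint of the interval.
        middle_err += abs(0.5 - truth)
    return random_err / n, middle_err / n


random_guess_error, middle_guess_error = simulate()
print(f"random guess mean error: {random_guess_error:.3f}")
print(f"middle guess mean error: {middle_guess_error:.3f}")
```

The first number should land close to 1/3 and the second close to 1/4, which is the gap between guessing randomly and guessing the middle.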

Guessing like this uses only the elbow point. It just feels like there should be some way to use the rest of the points to make a guess. So that’s why I’m looking at bspline; it’s the only one so far that can outperform a guess. I haven’t tried it in the printer yet but I have high hopes.

I also haven’t gotten there yet, but stacking is worth trying. If linear regression comes up with an answer outside the [elbow, elbow-1] range, try bspline, and if that also fails, just guess the middle. That would curtail the worst of the outliers but preserve the good performance in linear and “near linear” conditions.
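Roughly what I have in mind for the stacking, as an untested sketch. Everything here is hypothetical: `stacked_estimate` is a made-up name, and the estimators are passed in as plain callables standing in for the linear regression and bspline fits, which aren't shown.

```python
from typing import Callable, Optional, Sequence

# Each estimator returns a collision estimate, or None if it could not fit.
Estimator = Callable[[], Optional[float]]


def stacked_estimate(bound_a: float, bound_b: float,
                     estimators: Sequence[Estimator]) -> float:
    """Return the first estimate that falls inside the elbow interval.

    bound_a and bound_b are the positions of the elbow and elbow-1 samples
    (in either order). If every estimator fails or lands outside the
    interval, fall back to guessing the middle.
    """
    lo, hi = min(bound_a, bound_b), max(bound_a, bound_b)
    for estimate in estimators:
        value = estimate()
        if value is not None and lo <= value <= hi:
            return value
    return (lo + hi) / 2.0


# Usage with stand-in estimators: the "linear" answer is out of range,
# so the "bspline" answer is used instead.
result = stacked_estimate(10.0, 9.0, [lambda: 12.3, lambda: 9.4])
print(result)  # 9.4
```

The order of the list is the order of trust, so linear regression goes first and the middle guess is only reached when nothing else gives an in-range answer.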

I’m busy adding the elbow finder testing as well…