I am currently writing a program that collects accelerometer readings during certain kinematic movements. The movements are quite short, and after each one I need to receive the accelerometer data measured during it. After a movement ends (a movement lasts, for example, 1000 ms), at least 300 ms pass before the accelerometer samples covering the end of the movement reach me. I tried to find the cause of this delay but could not reduce it in any way. In my opinion, the worst-case delay should be equal to BATCH_UPDATES, which is 100 ms by default.
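To make the expectation above concrete, here is a minimal sketch of the idealized model behind it. It assumes (hypothetically) that batches arrive exactly every BATCH_UPDATES seconds with zero transport latency and that each batch holds every sample up to its arrival time. The function `ideal_wait` and its parameters are illustrative names, not part of Klipper.

```python
import math

BATCH_UPDATES = 0.100  # seconds; the default batch interval mentioned above


def ideal_wait(move_end, first_batch_time=0.0, period=BATCH_UPDATES):
    """Time from move_end until the first batch whose samples cover it.

    Hypothetical model: batch k arrives at first_batch_time + k * period,
    with zero transport latency, and holds every sample up to that instant.
    """
    k = max(math.ceil((move_end - first_batch_time) / period), 0)
    return first_batch_time + k * period - move_end


# Sweep move end times across several batch periods and find the longest wait.
waits = [ideal_wait(1.0 + i * 0.001) for i in range(500)]
worst = max(waits)
print(f"worst ideal wait: {worst:.4f} s")
```

Under these assumptions the wait does not exceed one batch period (up to floating-point rounding), which is why a delay of 300 ms or more looks unexpected.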

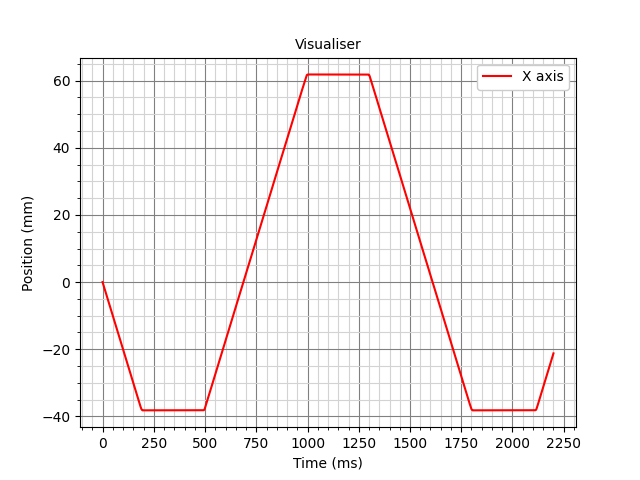

I wrote a small extra that reproduces this issue. It moves the print head back and forth between 10 mm and 50 mm on X, and after each move it waits for the measurements to arrive from the accelerometer.

You can add it to your extras and enable it in the configuration:

[accel_timing_test]

accel_chip: adxl345

The program shows the planned start and end times of the movement, which are also the boundaries of the measurement:

// measure_start_time: 18.1151011475

// measure_end_time: 18.61908726

In “real time”, it also logs each accelerometer data callback in handle_batch(), comparing the current print_time with the last sample_mcu_time in the batch:

// print_time: 18.61908726, sample_mcu_time: 17.941941, delta: 0.6771462600000007

// print_time: 18.61908726, sample_mcu_time: 18.043266, delta: 0.5758212600000014

// print_time: 18.61908726, sample_mcu_time: 18.141421, delta: 0.47766625999999945

// print_time: 18.61908726, sample_mcu_time: 18.242744, delta: 0.3763432600000023

// print_time: 18.61908726, sample_mcu_time: 18.344068, delta: 0.27501926000000054

// print_time: 18.769216634652995, sample_mcu_time: 18.442217, delta: 0.32699963465299575

// print_time: 18.80201592, sample_mcu_time: 18.543531, delta: 0.25848491999999723

// print_time: 18.9030213425, sample_mcu_time: 18.644842, delta: 0.2581793425000001
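As a cross-check of the log above, the following sketch parses those lines and recomputes two things: the delta for each callback and the spacing between the last sample_mcu_time of consecutive batches. The regular expression and variable names are my own and only assume the line format shown above.

```python
import re

LOG = """\
// print_time: 18.61908726, sample_mcu_time: 17.941941, delta: 0.6771462600000007
// print_time: 18.61908726, sample_mcu_time: 18.043266, delta: 0.5758212600000014
// print_time: 18.61908726, sample_mcu_time: 18.141421, delta: 0.47766625999999945
// print_time: 18.61908726, sample_mcu_time: 18.242744, delta: 0.3763432600000023
// print_time: 18.61908726, sample_mcu_time: 18.344068, delta: 0.27501926000000054
// print_time: 18.769216634652995, sample_mcu_time: 18.442217, delta: 0.32699963465299575
// print_time: 18.80201592, sample_mcu_time: 18.543531, delta: 0.25848491999999723
// print_time: 18.9030213425, sample_mcu_time: 18.644842, delta: 0.2581793425000001
"""

PATTERN = re.compile(r"print_time: ([\d.]+), sample_mcu_time: ([\d.]+)")


def parse(log):
    """Return (print_time, sample_mcu_time) pairs found in the log text."""
    rows = []
    for line in log.splitlines():
        match = PATTERN.search(line)
        if match:
            rows.append((float(match.group(1)), float(match.group(2))))
    return rows


rows = parse(LOG)
# Lag between the reported print_time and the newest sample in each batch.
deltas = [print_time - sample_time for print_time, sample_time in rows]
# Spacing between the newest samples of consecutive batches.
spacing = [b[1] - a[1] for a, b in zip(rows, rows[1:])]

print("batch spacing:", [round(s, 4) for s in spacing])
print("smallest delta:", round(min(deltas), 4))
```

In this log the batches are spaced roughly 0.1 s apart in sample time, which matches BATCH_UPDATES, yet the newest sample is always more than 0.25 s behind print_time.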

I use a self-written handle_batch() only because it displays this additional information.

Finally, it shows this line:

// now_print_time: 18.9077851925, last_mcu_time_in_batch: 18.644842, delta: 0.26294319249999987, get_sample_delta: 0.28869793249999987

Where:

now_print_time - the current print time

last_mcu_time_in_batch - the time of the last measurement in the incoming batch that exceeded measure_end_time, which is what allowed the wait for accelerometer data to finish

delta - the difference between now_print_time and last_mcu_time_in_batch

get_sample_delta - the difference between now_print_time and measure_end_time, i.e. the full time between the end of the move and receiving the data
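The two differences defined above can be recomputed from the reported values. This is a small sketch only; `report_deltas` is a hypothetical helper name, not a function from the extra.

```python
def report_deltas(now_print_time, last_mcu_time_in_batch, measure_end_time):
    """Return (delta, get_sample_delta) as defined in the list above."""
    delta = now_print_time - last_mcu_time_in_batch
    get_sample_delta = now_print_time - measure_end_time
    return delta, get_sample_delta


# Values copied from the final log line and from measure_end_time.
delta, get_sample_delta = report_deltas(
    now_print_time=18.9077851925,
    last_mcu_time_in_batch=18.644842,
    measure_end_time=18.61908726,
)
print(f"delta: {delta:.6f} s, get_sample_delta: {get_sample_delta:.6f} s")
```

Both values come out at well over 0.25 s, compared with the 0.1 s expected from BATCH_UPDATES.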

I also consulted @nefelim4ag about this problem, but we did not come to any conclusion, and I decided to ask this question here.

Any edits and suggestions are welcome. Thanks.