@Sineos What about your answers am I not reading? I do understand what you are saying about the issue. I will not try to keep this response short; instead I will address everything here to make sure you understand that I have read what you have written, both in this post and in the previous posts you have referenced. If you read my responses to the posts from @hcet14, I had already responded to the issues in the “timer too close” post. Referencing the entire page again doesn’t address my initial answers to that suggestion, as I thought I had been explicit in answering the concerns raised.

Timer too close

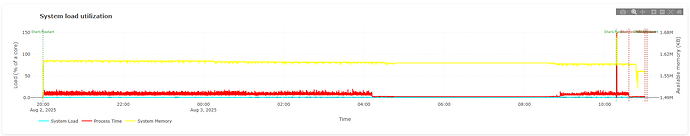

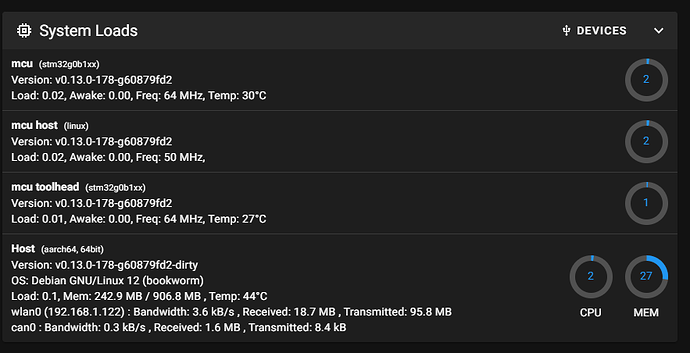

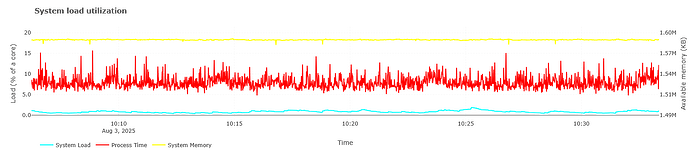

1- High CPU or system load on the host - CPU utilization on the host is less than 50%, so I don’t think this applies, but please correct me if I’m wrong.

2- High disk activity or SD card issues - The OS is installed on the eMMC. I don’t have read/write stats on its usage, but again I don’t think this applies. Correct me if I’m wrong.

3- Memory swapping due to low free RAM - Similar to issue #1, utilization is less than 50%, so I’m assuming this isn’t an issue. Again, please correct me if I’m wrong.

4- Overheating and CPU throttling - Just like the previous issues, I’m not seeing any overheating on the M8P. It’s in its own enclosure with forced air cooling, separate from the printer enclosure. From what I see, temps never get above 60 °C. The EBB board is in a 50 °C enclosure, so it will be “hot”, but since it’s not involved in this error it should be able to be discounted.

5- Undervoltage conditions - No undervoltage messages are present.

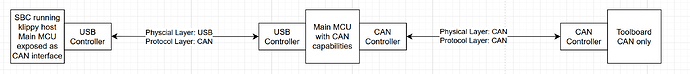

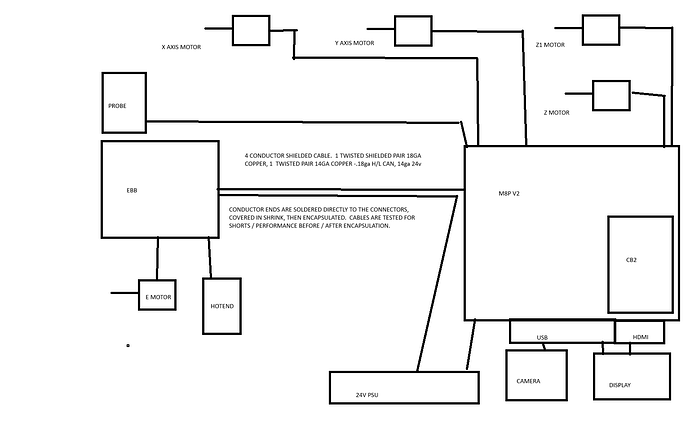

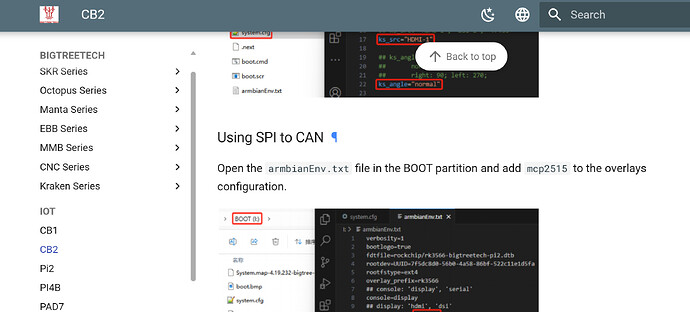

6- USB, UART, or CAN wiring faults - Since this branch of the CAN network consists of the USB connection between the CB2 and the M8P, any wiring fault would be a hardware fault on one or both boards. This is unrealistic for me to troubleshoot, and since the boards are installed in an enclosure and stable, flexing from thermal expansion would be the only cause of an intermittent fault. This is a possibility, but there is no correlation between load/temps and the errors.

7- ElectroMagnetic Interference (EMI) - Again, a possibility, but since the boards are in a grounded enclosure separate from the PSU and at least 2 m away from any other machinery, the boards themselves would need to be the ones putting out the EMI, which would indicate a much more severe defect than a broken trace/pin. Again, please correct me if I’m wrong. None of the other equipment in the room was powered on when the error occurred.

8- Conflicting USB devices (e.g. webcams, displays, hubs) - There is a USB camera and a touch display connected to the board via USB/HDMI. The error has occurred when there were no USB devices connected. I’m happy to disconnect the camera and/or the touchscreen and let the error happen again to prove it if necessary. This is another possibility, but the error has occurred with and without these devices connected, so I’m skeptical at best that this is the root cause.

9- Incorrect firmware Clock Reference setting - The devices normally work correctly. With an incorrect clock reference they would not function at all.

10- Running Klipper inside a Virtual Machine (VM) - N/A

11- Poorly written macros or command spam from slicers - No special macros are used that could possibly cause this. Errors happen with files that also print successfully. Errors happen with small and large prints; there is nothing consistent about the gcode files that cause the error. Files from multiple slicers have caused the error.

12- Unofficial modules or patched Klipper forks - The CB2 requires the BTT fork. This is my guess at the root cause. I have another CM4 coming, hopefully soon, to try an official Klipper installation.

If there is something else in that post I’m missing, please enlighten me.
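For items 1, 3 and 4, a timestamped record of host load, memory, swap and temperature would back up the “less than 50%” and “below 60 °C” observations with data taken around the moment of the error. The following is a minimal sketch, assuming a Linux host that exposes `/proc/loadavg`, `/proc/meminfo` and a thermal zone under `/sys/class/thermal`. The function names are made up for illustration, and which thermal zone corresponds to the CPU on the CB2 is an assumption that would need checking.

```python
import time
from pathlib import Path


def parse_loadavg(text):
    """Return the 1-minute load average from the contents of /proc/loadavg."""
    return float(text.split()[0])


def parse_meminfo(text):
    """Return a dict of numeric /proc/meminfo fields (values in kB where applicable)."""
    fields = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[key.strip()] = int(parts[0])
    return fields


def swap_used_kb(fields):
    """Swap in use, derived from SwapTotal and SwapFree."""
    return fields.get("SwapTotal", 0) - fields.get("SwapFree", 0)


def parse_temp_c(text):
    """Thermal zone files report millidegrees Celsius."""
    return int(text.strip()) / 1000.0


def _read(path):
    """Read a file, returning None if it does not exist or cannot be read."""
    try:
        return Path(path).read_text()
    except OSError:
        return None


def sample(thermal_zone="/sys/class/thermal/thermal_zone0/temp"):
    """Take one snapshot. thermal_zone0 being the CPU sensor is an assumption."""
    load = _read("/proc/loadavg")
    mem = _read("/proc/meminfo")
    temp = _read(thermal_zone)
    fields = parse_meminfo(mem) if mem else {}
    return {
        "time": time.time(),
        "load1": parse_loadavg(load) if load else None,
        "mem_available_kb": fields.get("MemAvailable"),
        "swap_used_kb": swap_used_kb(fields) if fields else None,
        "temp_c": parse_temp_c(temp) if temp else None,
    }
```

Calling `sample()` every few seconds and appending the result to a file would give a trace that can be lined up against the timestamp of the next failure.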

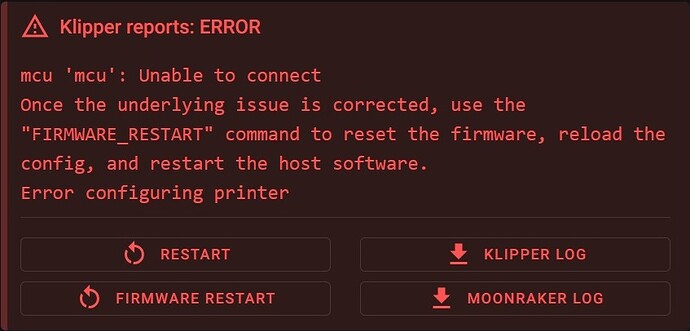

Reiteration of the error as I understand:

Klipper was expecting a response from the M8P. Sometime between line #51702 and #51703 in the log, the M8P stops communicating and the retransmits start to build up. At line #51705 Klipper’s timeout has been reached and it reports the failure to communicate with the M8P. Based on these observations, the assumption is that the M8P has failed in a way that makes it stop communicating completely. When the host is rebooted, any messages sent to the M8P to establish a connection get no response. The only way to break the M8P out of whatever state it’s in is to power cycle it, forcing it to reboot.
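To pin down where the retransmits start, the `Stats` lines in `klippy.log` can be scanned for jumps in the `bytes_retransmit` counter. The sketch below assumes the usual layout of those lines, where each MCU section is introduced by a `name:` token followed by `key=value` pairs. The section names `mcu` and `EBBCan` in the usage example are placeholders, not taken from the actual log.

```python
import re

# A Stats line starts with "Stats <timestamp>: " followed by space-separated tokens.
STATS_RE = re.compile(r"^Stats (\d+(?:\.\d+)?): (.*)$")


def retransmits_by_section(line):
    """Return (timestamp, {section: bytes_retransmit}) or None for non-Stats lines."""
    m = STATS_RE.match(line)
    if not m:
        return None
    timestamp = float(m.group(1))
    section = None
    counts = {}
    for token in m.group(2).split():
        if token.endswith(":") and "=" not in token:
            section = token[:-1]  # start of a new section, e.g. "mcu:"
        elif token.startswith("bytes_retransmit=") and section is not None:
            counts[section] = int(token.split("=", 1)[1])
    return timestamp, counts


def retransmit_jumps(lines, threshold=1):
    """List (line number, timestamp, section, delta) where the counter grew."""
    previous = {}
    jumps = []
    for lineno, line in enumerate(lines, start=1):
        parsed = retransmits_by_section(line.rstrip("\n"))
        if parsed is None:
            continue
        timestamp, counts = parsed
        for name, value in counts.items():
            delta = value - previous.get(name, value)
            if delta >= threshold:
                jumps.append((lineno, timestamp, name, delta))
            previous[name] = value
    return jumps
```

Usage would look like `retransmit_jumps(open("klippy.log"))`. A section whose counter climbs sharply just before the shutdown is the one that lost communication; a counter that stays flat on the other MCUs supports the claim that the EBB side is not involved.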

To troubleshoot this I will need to determine what’s happening to the M8P that causes it to stop communicating with the host. I have attempted to answer everyone’s questions and work up to where we are. I was aware going into this that something was causing the M8P to “lock up” and stop responding.

I did not come here before troubleshooting things myself. Knowing that I would need to address the claims about my wiring to the EBB, I ensured that there were absolutely no issues with the rest of the CAN network. The firmware is fully updated to Version: v0.13.0-190-g5eb07966b.

@mykepredko I’m not sure what you’re seeing in the log with that spike. I don’t see that at all even when I zoom in on that section.

To run through the rest of your questions/concerns:

Maybe you’ve added hardware to your host like cameras that could be causing a problem, we don’t have visibility to that – There is a USB webcam and a USB touchscreen connected currently. This error has occurred in the past with none of those devices attached. I’m more than happy to remove them and wait for the error to happen again, then post the logs again.

You aren’t forthcoming about your wiring; if you can’t share that information because of an NDA then that sounds fishy to me as you’re doing something unusual – I have tried to be completely forthcoming about the setup and the specifics down to gauge sizes of the individual conductors. If you need me to post it again, I’m happy to but I fully described the harness both to @cardoc and again to @Sineos. The NDA covers publicly posting pictures of hardware. I don’t personally think there is anything proprietary about the cables, but I don’t decide what’s covered in the NDA. The only reason I can think that a picture of the physical cabling is needed is that you don’t believe my statements about it. Never mind the fact that this error has NOTHING to do with the CAN wiring harness connecting the M8P to the EBB.

There doesn’t seem to be any experimentation done to characterize the problem.

You have pointed to the error message but I haven’t seen any work done to try and reproduce the error or make it occur sooner - years ago when I was doing failure analysis on product we’d call this “shortening the ‘scope loop”. – I have done 6 months of troubleshooting and testing. I have eliminated dozens of errors and issues that have cropped up, some blocking, some minor. This error occurs completely randomly. I am unable to cause it to happen more or less frequently. It will usually happen once in approximately 100-150 hours of printing. It usually happens with longer prints, but has happened during the first 5 minutes of a print. My conversations so far seem to be focused on getting people to accept that I have already done extensive troubleshooting and testing. If you have a specific suggestion on how to “shorten the scope loop” I’d love to hear it. That is after all, why I’m here.
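A rough calculation shows why a failure that appears once in 100-150 hours is so slow to test against. The sketch below assumes the failures are memoryless (a Poisson process), which is only an approximation of “completely random”, and takes 125 hours as the midpoint of the observed range. Both function names are illustrative.

```python
import math


def chance_of_clean_run(mtbf_hours, run_hours):
    """Probability of seeing no failure in run_hours if nothing was actually fixed."""
    return math.exp(-run_hours / mtbf_hours)


def hours_needed(mtbf_hours, confidence=0.95):
    """Failure-free hours needed before a clean run is unlikely to be luck."""
    return -mtbf_hours * math.log(1.0 - confidence)


if __name__ == "__main__":
    mtbf = 125.0  # assumed midpoint of the observed 100-150 hour range
    print(f"Clean 125 h run by chance: {chance_of_clean_run(mtbf, 125.0):.0%}")
    print(f"Hours needed for 95% confidence: {hours_needed(mtbf):.0f}")
```

Under these assumptions, a single clean 125-hour run happens by chance more than a third of the time even with the fault still present, and roughly 375 failure-free hours are needed before a change can be credited with fixing it. That is the practical cost of not being able to make the error occur sooner.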

Swapping out parts.

You keep going back to the CB1/M8P interface (because of the error message, to be fair) but the 100pin connector interface and USB Hub circuitry is remarkably robust and I don’t think I’ve ever seen a problem here. If I were in your situation and I was suspicious of the interface, I’d swap out the CB2, run tests with the new Host and, if there was still a problem, then I’d swap out the M8P and, if there were still problems: I’D LOOK ELSEWHERE. – I’m trying to find the “ELSEWHERE” to look. We have swapped out the M8P, EBB, and the CB2 with no resolution.

Less talk, more action. As I said above, there is more than 40 posts in this thread and I don’t see any experiments done to try and understand the problem.

When I have a problem to solve my approach is to hypothesize as to the causes - we’re helping with that but, due to the lack of understanding of your system, there’s only so much we can do.

Make up a list of the components in your system and look to see where there could be a problem and test it out. Make that component fail and see if it replicates your error. If it does, publish the results so we can comment and make suggestions. – I have thus far been trying to catch everyone else up to where I am with testing this. I’ll gladly do anything that I haven’t done already and post those results. I have been testing this system for months, stressing each component and finding out how it fails, how to fix/mitigate the failure, and repeating. I will post a full schematic when I have a chance to put one together.

Thanks for the suggestion on the Klipper versions. I’ll update to the latest version and flash the new versions to the MCUs. The error has occurred when all versions match, but again, I’ll repost logs/errors when it happens again.

@cardoc The boards have been disconnected and reconnected multiple times with no noticeable reduction in the error.