Troubleshooting Spontaneous SBC Reboots and Crashes in Klipper

This guide provides a structured approach to diagnosing and resolving system instability or sudden crashes with the Single Board Computer (SBC) used for your Klipper setup.

Background

Single Board Computers (SBCs) like the Raspberry Pi, Orange Pi, or BIGTREETECH Pi are the brains of a Klipper 3D printer. They run a full Linux operating system and the Klipper host software (Klippy), which does the heavy lifting of processing G-code and sending low-level commands to the printer’s microcontroller (MCU).

Hard crashes in this architecture almost always originate from the SBC and its operating system, not the Klipper software. Crashes caused by the Klipper host software itself are rare and typically do not take down the entire system.

Symptoms

From a user’s perspective, an SBC-related failure usually manifests in one of the following ways:

- Sudden Print Failure: The print or current operation stops abruptly.

- Unresponsive System: The web interface (Mainsail, Fluidd, etc.) becomes inaccessible, and SSH connections to the SBC fail or time out. Usually, only a power cycle will revive the system.

- Spontaneous Reboot: The print fails, and after a minute or two, the web interface becomes accessible again. This often indicates the entire SBC rebooted.

- Klipper Service Restart: The print fails, but the web interface reloads quickly. You might see an error message about Klipper being disconnected and then reconnected. This suggests only the Klipper host application crashed and was automatically restarted by the system’s service manager (systemd), while the underlying operating system remained running.

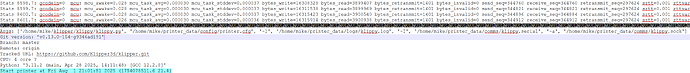

Interpreting klippy.log

The klippy.log file is crucial for understanding what happened. You can find it in your ~/printer_data/logs directory. In a hard crash scenario, the klippy.log will typically show the following:

- The log shows normal Stats lines, and then it abruptly stops.

- There is no error message or clean shutdown notice.

- You may see a line of garbage or null characters. This happens when the system crashes while the log file is being written to, causing corruption.

- After the SBC reboots, the Klipper service starts again. This is why the log file continues with the full Klipper startup banner (Args: ..., Git version: ..., Start printer at ...).

- There will be a significant time gap between the last Stats line before the crash and the new Start printer at ... timestamp, corresponding to the time the SBC was offline.
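To locate these restart points quickly in a long log, a small helper can pair each startup banner with the last Stats line that preceded it. This is a minimal sketch, not part of Klipper: the function name and the KLIPPY_LOG variable are made up for illustration, and it assumes Stats lines begin with "Stats " and the banner line begins with "Start printer at", as described above.

```shell
#!/bin/sh
# Sketch: for every Klipper startup banner, print the last Stats line
# that was logged before it. Line prefixes are assumptions taken from
# the description above ("Stats ..." and "Start printer at ...").
last_stats_before_restart() {
    awk '
        /^Stats / { last = $0; have = 1 }
        /^Start printer at / {
            if (have) print "last stats: " last
            print "restart:    " $0
            have = 0
        }
    ' "$1"
}

# Hypothetical default path; override with KLIPPY_LOG if your log lives elsewhere.
LOG="${KLIPPY_LOG:-$HOME/printer_data/logs/klippy.log}"
if [ -f "$LOG" ]; then
    last_stats_before_restart "$LOG"
fi
```

A banner that appears without a preceding error or shutdown message is a candidate for a hard crash and is worth inspecting by hand in the log.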

Common Causes

Most spontaneous crashes can be traced back to a few common hardware and power-related issues.

- Insufficient Power: Low-quality, unstable, or underpowered power supplies are the most common cause. This also applies to the USB cable between the PSU and the SBC. Use a high-quality, brand-name PSU with a rating appropriate for your SBC (e.g., 5.1V / 3A or higher for a Raspberry Pi 4). Use short, thick-gauge USB cables designed for power delivery, not just data transfer.

- Back-Powering / Back-Feeding: This occurs when two actively powered devices are connected via USB, such as an SBC and the printer’s mainboard. One device can push power toward the other, leading to power instability. A common fix is to prevent the USB cable from carrying power by taping over the +5V (VCC) pin on the connector or carefully cutting the red wire inside the cable. Also, ensure your printer’s mainboard is configured via jumpers to receive power from its own PSU, not from USB.

- Overheating: High CPU load or insufficient airflow can cause the SBC to overheat. This leads to thermal throttling (slowing the CPU to reduce heat) or a complete shutdown to prevent damage. Ensure adequate cooling. At a minimum, use heatsinks on the main processor. For enclosed printers or high ambient temperatures, an active cooling fan is highly recommended. Monitor the SBC’s temperature; values above 80°C are critical.

- Failing SD Card: SD cards have a limited number of write cycles and are prone to corruption or failure, especially low-quality cards. This can lead to Input/Output (I/O) errors that crash the system. Use a reputable, high-endurance SD card (e.g., “Endurance” or “Industrial” grade). Regularly back up your printer.cfg and other important files. If you suspect an issue, try a different, newly flashed SD card.

- Kernel or Driver Issues: The Linux kernel or its drivers can have bugs that crash the entire system. These “kernel panics” are difficult for an average user to diagnose and fix. Searching online for any kernel panic messages you find in the logs may provide hints about the cause.

- Additional Hardware: Any device connected to the SBC (webcams, displays, etc.) can introduce instability due to defects, buggy drivers, or poor design. When diagnosing hard crashes, disconnect all non-essential hardware and stress-test the bare system to help isolate the problem.

- Klipper Software Bugs: While possible, bugs in the Klipper host software are extremely unlikely to crash the entire operating system. A Klipper bug will typically cause the klipper service to fail, but systemd will restart it automatically. The SBC itself will remain responsive.

- Hardware Defects: Though less common, a faulty SBC, a problematic USB device, or a poorly shielded cable can cause system instability.
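The power and thermal causes above can often be checked directly from the command line. The following is a hedged sketch with two hypothetical helper functions. decode_throttled interprets the bit mask reported by vcgencmd get_throttled, which exists only on Raspberry Pi systems. read_temp_c reads a temperature in millidegrees from sysfs; the assumption that thermal_zone0 is the SoC sensor holds on many boards but not all.

```shell
#!/bin/sh
# decode_throttled HEX: explain the bit mask from "vcgencmd get_throttled"
# (Raspberry Pi only). Bits 0-3 describe the current state, bits 16-19
# record that the condition has occurred since boot.
decode_throttled() {
    value=$(( $1 ))
    while read -r bit text; do
        if [ $(( ($value >> $bit) & 1 )) -eq 1 ]; then
            echo "$text"
        fi
    done <<EOF
0 under-voltage detected now
1 ARM frequency capped now
2 currently throttled
3 soft temperature limit active now
16 under-voltage has occurred since boot
17 ARM frequency capping has occurred since boot
18 throttling has occurred since boot
19 soft temperature limit has occurred since boot
EOF
}

# read_temp_c [SENSOR]: print a sysfs temperature (millidegrees) in degrees C.
# The default sensor path is an assumption; other boards may use another zone.
read_temp_c() {
    sensor="${1:-/sys/class/thermal/thermal_zone0/temp}"
    awk '{ printf "%.1f\n", $1 / 1000 }' "$sensor"
}

if command -v vcgencmd >/dev/null 2>&1; then
    raw=$(vcgencmd get_throttled)          # output looks like throttled=0x50000
    decode_throttled "${raw#throttled=}"
fi
if [ -r /sys/class/thermal/thermal_zone0/temp ]; then
    echo "SoC temperature: $(read_temp_c) C"
fi
```

No output from decode_throttled means no flag is set. Any "has occurred since boot" line suggests the power supply or cooling is worth a closer look even if the system currently appears healthy.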

Distinguishing a Klipper Crash from an OS Crash

Before diving into hardware diagnostics, first confirm whether the crash affected the entire SBC or just the Klipper host software. If you can still connect to the SBC via SSH after a print failure, the issue was likely limited to the Klipper service.

You can check the Klipper service’s status using journalctl. This command shows the last 50 log entries for the klipper service:

journalctl -u klipper.service -n 50 --no-pager

- If you see error messages followed by lines indicating the service stopped and then started again, the Klipper host crashed, but the OS recovered it.

- If the log simply ends without any error or shutdown message, it strongly indicates the entire system went down abruptly.
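Another quick way to tell the two cases apart is to compare the system's boot time with the time the print failed. The sketch below reads the btime field from /proc/stat, which holds the boot time as a Unix timestamp on Linux. The function names are hypothetical, and the failure time is something you supply yourself.

```shell
#!/bin/sh
# boot_epoch [STATFILE]: print the boot time as a Unix timestamp,
# taken from the "btime" line of /proc/stat.
boot_epoch() {
    awk '$1 == "btime" { print $2 }' "${1:-/proc/stat}"
}

# rebooted_since FAILURE_EPOCH [STATFILE]: succeed if the system booted
# after the given time, i.e. the whole SBC restarted, not just Klipper.
rebooted_since() {
    [ "$(boot_epoch "$2")" -gt "$1" ]
}

if [ -r /proc/stat ]; then
    echo "Boot time (Unix timestamp): $(boot_epoch)"
fi

# Example with a made-up failure time:
#   if rebooted_since 1714573800; then echo "SBC rebooted after the failure"; fi
```

If the boot time is older than the failure, the operating system kept running and the problem was most likely limited to the Klipper service. On most distributions, uptime -s reports the same boot time in human-readable form.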

For more context, check the system-wide logs for other error messages around the time of the failure:

# Check the kernel ring buffer for hardware or driver errors

journalctl -k --no-pager

An abrupt end to all logs is the classic sign of a hard power loss or system freeze.
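Kernel logs can be long, so it may help to filter them for messages that commonly accompany power, storage, or memory trouble. The patterns in this sketch are illustrative assumptions, not an exhaustive or authoritative list, and the function name is made up for this example.

```shell
#!/bin/sh
# filter_kernel_log: read kernel log text on stdin and keep only lines that
# match a few illustrative trouble patterns (case-insensitive).
filter_kernel_log() {
    grep -iE 'under-?voltage|i/o error|kernel panic|out of memory|oom-killer|mmc[0-9]+: .*(error|timeout)' || true
}

if command -v journalctl >/dev/null 2>&1; then
    journalctl -k --no-pager 2>/dev/null | filter_kernel_log
fi
```

An empty result does not prove the system is healthy. It only means none of these particular patterns appeared in the current boot's kernel log.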

Reproducing Crashes with Stress Tests

To confirm a hardware instability issue (power, thermal, or I/O), you can stress-test the system to try and trigger the crash under controlled conditions. This should only be done while the printer is idle or even disconnected from the SBC. The sysbench utility is excellent for this.

- Install sysbench

sudo apt update

sudo apt install sysbench

- Run Stress Tests

It’s highly effective to run multiple tests simultaneously in separate SSH sessions. This maximizes the load on the system. While the tests are running, open another SSH session to watch the kernel log live for any error messages.

Note:

- Depending on your SBC’s specifications (CPU cores, memory, SD card space), you may need to adapt the test parameters.

- Refer to the sysbench man page for details on its settings.

- For thermal stress testing, you can increase the --time value for the CPU test.

- Live Kernel Log (Session 1): Watch for undervoltage warnings, I/O errors, or other critical messages.

sudo dmesg -wH

- CPU Stress Test (Session 2): This test maxes out all CPU cores, increasing power draw and heat. Adjust --threads to match the number of cores on your SBC.

# This will run for 2 minutes (120 seconds)

sysbench cpu --cpu-max-prime=20000 --threads=4 --time=120 run

- Memory Stress Test (Session 3): This test stresses the memory and memory controller.

sysbench memory --memory-block-size=1K --memory-scope=global --memory-total-size=1G --memory-oper=write run

- I/O Stress Test (Optional - Session 4): This test stresses the SD card controller and the card’s read/write performance.

# First, prepare a 1GB test file

sysbench fileio --file-total-size=1G prepare

# Next, run a random read/write I/O test

sysbench fileio --file-total-size=1G --file-test-mode=rndrw --threads=4 run

# Finally, clean up the test file

sysbench fileio --file-total-size=1G cleanup

Important:

- Stress testing the SD card causes considerable wear.

- SD cards that are already worn or pre-damaged may fail completely.

If your system reboots or freezes during these tests, you have almost certainly confirmed an underlying hardware instability.
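Because a hard crash also ends your SSH sessions, it can be useful to record temperatures to a file while the tests run, so the last readings before a crash are still available afterwards. This is a minimal sketch with a hypothetical log_temps function. It assumes the sensor at thermal_zone0 reports the SoC temperature in millidegrees, which varies between boards.

```shell
#!/bin/sh
# log_temps LOGFILE INTERVAL COUNT [SENSOR]
# Append COUNT timestamped temperature readings to LOGFILE, one every
# INTERVAL seconds. The default sensor path is an assumption.
log_temps() {
    logfile=$1
    interval=$2
    count=$3
    sensor="${4:-/sys/class/thermal/thermal_zone0/temp}"
    i=0
    while [ "$i" -lt "$count" ]; do
        temp=$(awk '{ printf "%.1f", $1 / 1000 }' "$sensor")
        echo "$(date '+%Y-%m-%d %H:%M:%S') $temp C" >> "$logfile"
        sync    # flush to storage so the entry has a chance to survive a crash
        i=$((i + 1))
        sleep "$interval"
    done
}

# Example (run in its own SSH session before starting the stress tests):
#   log_temps "$HOME/stress_temps.log" 5 60    # one reading every 5 s for 5 minutes
```

The call to sync after every reading adds a small amount of SD card wear, so this is best limited to the duration of the test.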

Deeper Diagnostics with Persistent Logging

By default, system logs are often stored in volatile memory and are lost on reboot. You can configure the system to save logs to the SD card, making them “persistent” across reboots. This allows you to investigate the cause of a crash after the system comes back online.

Note:

- This is not guaranteed to capture the crash event. Depending on the severity of the crash, the system may not have time to write the final log entries to the SD card.

- The steps below are generic. Details depend on the Linux distribution, software versions, and other factors, so you may need to research the correct setup for your system.

- Persistent logging adds to wear of the SD card. Use only for troubleshooting purposes.

- Other advanced diagnostic tools, like watchdog or hardware serial debugging, are beyond the scope of this article.

- Enable Persistent Logging:

These commands create a directory for the journal logs and tell the logging service (systemd-journald) to use it.

sudo mkdir -p /var/log/journal

sudo systemd-tmpfiles --create --prefix /var/log/journal

sudo systemctl restart systemd-journald

- Verify Persistence:

Edit the journal configuration file to ensure persistence is explicitly set.

sudo nano /etc/systemd/journald.conf

Find the #Storage= line, uncomment it (remove the #), and set it to persistent. It should look like this:

Storage=persistent

Save the file (Ctrl+X, Y, Enter).

- Reboot the SBC for the change to take full effect.

- Review Previous Boot Logs:

After a crash and subsequent reboot, you can view the complete log from the previous boot using the -b -1 flag. This is invaluable for seeing the final log messages leading up to the crash.

journalctl -b -1 --no-pager
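To double-check that the Storage= setting took effect before waiting for the next crash, the configured value can be read back from the configuration file. The sketch below uses a hypothetical journal_storage_mode function. It only inspects the main journald.conf and does not consider drop-in files that some distributions place in a journald.conf.d directory, so treat the result as a hint.

```shell
#!/bin/sh
# journal_storage_mode [CONF]: print the active (uncommented) Storage= value
# from journald.conf, or "auto" (the documented default) if none is set.
# Drop-in configuration files are not considered in this sketch.
journal_storage_mode() {
    conf="${1:-/etc/systemd/journald.conf}"
    mode=$(sed -n 's/^[[:space:]]*Storage=[[:space:]]*//p' "$conf" 2>/dev/null | tail -n 1)
    echo "${mode:-auto}"
}

echo "journald Storage mode: $(journal_storage_mode)"

# List the boots the journal knows about; with persistent logging enabled,
# earlier boots should appear here after the next reboot.
if command -v journalctl >/dev/null 2>&1; then
    journalctl --list-boots --no-pager 2>/dev/null || true
fi
```

Once earlier boots are listed, adding -p err to the previous-boot command (journalctl -b -1 -p err --no-pager) narrows the output to error-level messages and above.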